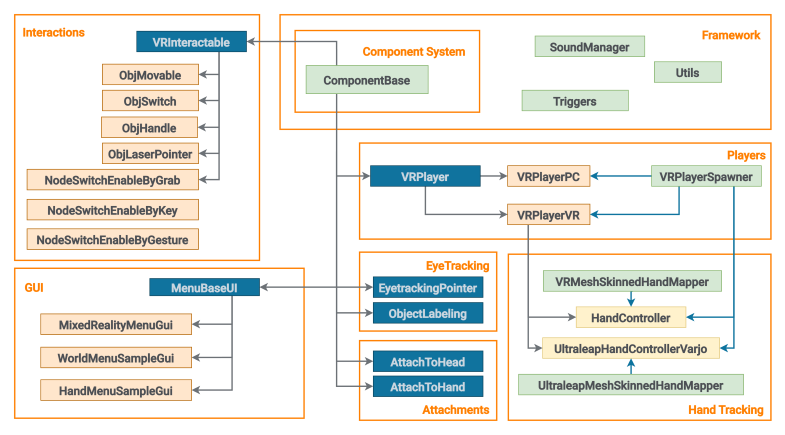

Classes and Components Overview

When you first open a project based on the VR Sample, the number of different classes can be confusing.

In this article, we'll take a closer look at how the VR Sample is organized, what each major class does, and how they work together, so you can better understand the system and start building on top of it with confidence.

VRPlayer Class

This is the base class for all players. It declares the baseline controls, common player operations, event management, and so on.

The following component classes are inherited from the VRPlayer:

Hand Tracking

Two specialized components extend the VRPlayerVR class to support natural hand tracking. Only one of them can be active at a time, depending on the system configuration:

| Component | Description |
|---|---|
| HandController | Inherits from VRPlayerVR and uses OpenXR's built-in hand tracking API when it is initialized and Ultraleap is not in use. It processes joint data to detect pinch and grab gestures, enabling natural interaction without physical controllers. |
| UltraleapHandControllerVarjo | Also inherits from VRPlayerVR. It is available when the Varjo backend is active (-vr_app varjo) and the Ultraleap plugin is loaded. It integrates directly with the Ultraleap SDK to provide detailed skeletal tracking and gesture interaction. |
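The text does not say how pinch gestures are derived from joint data. One common approach, shown here as a minimal sketch and not as the VR Sample's actual implementation, is to compare the distance between the thumb tip and the index fingertip with a threshold. The `Vec3` type, the `isPinching` function, and the 2 cm default threshold are all hypothetical names and values chosen for illustration.

```cpp
#include <cmath>

// Minimal 3D point type for this sketch (hypothetical, not a UNIGINE type).
struct Vec3 { float x, y, z; };

// Euclidean distance between two points.
inline float distanceBetween(const Vec3 &a, const Vec3 &b)
{
    const float dx = a.x - b.x;
    const float dy = a.y - b.y;
    const float dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Returns true when the thumb tip and index fingertip are closer than
// the threshold (in meters). The default threshold is an assumed value.
inline bool isPinching(const Vec3 &thumb_tip, const Vec3 &index_tip,
                       float threshold = 0.02f)
{
    return distanceBetween(thumb_tip, index_tip) < threshold;
}
```

A real implementation would likely add hysteresis (separate thresholds for starting and releasing the pinch) so that the gesture does not flicker when the fingertips hover near the threshold.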

To animate hand models, two corresponding mappers are used:

| Component | Description |
|---|---|
| VRMeshSkinnedHandMapper | Maps OpenXR joint data to skinned hand meshes. |
| UltraleapMeshSkinnedHandMapper | Applies Ultraleap skeletal data to the mesh. |

For more details, see the article: Hand Tracking.

Eye Tracking

Eye tracking enables interaction based on the user's gaze direction. It is supported in the VRPlayerVR class and can be used for gaze-based object selection and labeling.

| Component | Description |
|---|---|
| EyetrackingPointer | This component calculates the gaze direction from the player's head position towards the focus point returned by the eye tracking system. It performs a raycast in that direction and identifies the object being looked at. |
| ObjectLabeling | Displays the name of the currently gazed object using a text label positioned near the hit point. The label follows the gaze and updates dynamically as the user looks at different objects. |

Eye tracking is active only if it is supported by the hardware and both VREyeTracking::isInitialized() and VREyeTracking::isValid() return true.
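The gaze direction described for EyetrackingPointer is the normalized vector from the head position to the focus point. The following is a minimal, engine-independent sketch of that step only; `Vec3` and `gazeDirection` are hypothetical names, and the raycast itself is left to the engine.

```cpp
#include <cmath>

// Minimal 3D vector type for this sketch (hypothetical, not a UNIGINE type).
struct Vec3 { float x, y, z; };

// Writes the unit vector pointing from `head` to `focus` into `out_dir`.
// Returns false (leaving `out_dir` untouched) when the two points coincide
// and no direction can be computed.
inline bool gazeDirection(const Vec3 &head, const Vec3 &focus, Vec3 &out_dir)
{
    const float dx = focus.x - head.x;
    const float dy = focus.y - head.y;
    const float dz = focus.z - head.z;
    const float length = std::sqrt(dx * dx + dy * dy + dz * dz);
    if (length <= 0.0f)
        return false;
    out_dir = Vec3{dx / length, dy / length, dz / length};
    return true;
}
```

In an application, this calculation would only run after the two VREyeTracking checks above have passed, and the resulting direction would be used as the ray direction for the raycast.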

VRPlayerSpawner Class

This class is responsible for instantiating and registering all VRPlayer components within the Component System. During initialization, it performs runtime checks to determine the current VR configuration and selects the appropriate player controller accordingly:

- If VR is initialized and an HMD is connected, it spawns a VRPlayerVR with all necessary components for full VR interaction.

- If the application is running with the Varjo backend and the Ultraleap plugin is available, it adds UltraleapHandControllerVarjo for external hand tracking.

- If OpenXR hand tracking is available and Ultraleap is not used, it adds HandController.

- If VR is not initialized, a VRPlayerPC is spawned instead, providing basic desktop control for testing or fallback usage.
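The selection rules above can be summarized as a small decision function. This is an illustrative sketch only: the `VRConfig` struct and `selectPlayerComponents` function are hypothetical and do not exist in the VR Sample, and the component names are returned as strings purely for demonstration. The sketch also assumes that a missing HMD leads to the same fallback as uninitialized VR, which the description does not state explicitly.

```cpp
#include <string>
#include <vector>

// Hypothetical snapshot of the runtime checks performed at startup.
struct VRConfig {
    bool vr_initialized;
    bool hmd_connected;
    bool varjo_backend;
    bool ultraleap_loaded;
    bool openxr_hand_tracking;
};

// Returns the names of the components that would be spawned for a
// given configuration, following the rules listed above.
inline std::vector<std::string> selectPlayerComponents(const VRConfig &cfg)
{
    std::vector<std::string> components;

    // Assumption: without an HMD we fall back to the desktop player too.
    if (!cfg.vr_initialized || !cfg.hmd_connected) {
        components.push_back("VRPlayerPC");
        return components;
    }

    components.push_back("VRPlayerVR");

    // Only one hand tracking component can be active at a time;
    // Ultraleap on the Varjo backend takes priority over OpenXR.
    if (cfg.varjo_backend && cfg.ultraleap_loaded)
        components.push_back("UltraleapHandControllerVarjo");
    else if (cfg.openxr_hand_tracking)
        components.push_back("HandController");

    return components;
}
```

Keeping the decision in one pure function like this makes the fallback order easy to test without VR hardware attached.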

Interaction-Related Classes

VRInteractable is the base class for all objects that you can interact with. It defines a basic set of interactions; in other words, this is where you define what a user can do with your object. You can also add your own types of interaction here.

The following component classes are inherited from the VRInteractable:

The following components also toggle the enabled state of assigned nodes. However, unlike other interaction components, they do not inherit from VRInteractable.

| Component | Description |
|---|---|
| NodeSwitchEnableByGesture | Toggles node state when a specific hand gesture is detected. |
| NodeSwitchEnableByKey | Toggles node state when a specific controller's key is pressed. |

Object Attachment

These components attach objects to the VR player's hands or head, ensuring consistent relative positioning.

| Component | Description |
|---|---|
| AttachToHand | Attaches an object to the player's hand in VR. It supports either automatic alignment using a custom position and rotation or preserving the current transform. The node is re-parented to the hand controller node (left or right) and aligned with a predefined basis correction for controller orientation. |
| AttachToHead | Places an object in front of the player's head in VR and optionally updates its position every frame. It allows specifying orientation relative to a chosen axis and controlling whether the position remains fixed or dynamic based on the player's gaze direction. |
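As a rough illustration of what "in front of the player's head" means geometrically, the sketch below offsets the head position along the head's forward direction by a fixed distance. The `Vec3` type, the `placeInFrontOfHead` function, and its parameters are hypothetical; the actual AttachToHead component also handles orientation and per-frame updates, which are omitted here.

```cpp
// Minimal 3D vector type for this sketch (hypothetical, not a UNIGINE type).
struct Vec3 { float x, y, z; };

// Returns the point located `distance` units from `head_pos` along
// `forward`. The caller is expected to pass a unit-length `forward`.
inline Vec3 placeInFrontOfHead(const Vec3 &head_pos, const Vec3 &forward,
                               float distance)
{
    return Vec3{head_pos.x + forward.x * distance,
                head_pos.y + forward.y * distance,
                head_pos.z + forward.z * distance};
}
```

Calling this once gives a fixed placement, while calling it every frame with the current head pose makes the object follow the player's view.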

MenuBaseGUI Class

This is the base class for all graphical user interfaces (GUI).

The following component classes are inherited from the MenuBaseGUI:

Framework

The Framework includes the Component System, which implements the core functionality of components, and a set of utility classes and functions for playing sounds, auxiliary and 3D math, and the callback system implementation.

Triggers Class

Triggers is a framework class used to mark room obstacles for the VR Player (e.g. room walls, objects, etc.) and to warn the player when an obstacle is in the way: as the player gets closer to an obstacle, the controllers' vibration becomes more intense.

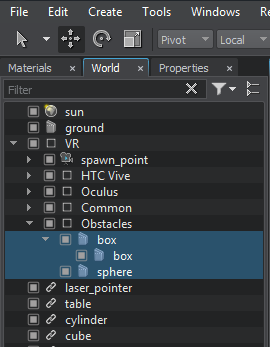

You can simply create primitives for the walls and objects in your room and add them as children to the dummy node named Obstacles, which is a child of the VR dummy node (see the hierarchy in the Editor below).

All children of the Obstacles node will be automatically switched to invisible mode and will be used only to inform the player and prevent collisions with objects in the real room.
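The proximity warning described in this section can be pictured as a mapping from the distance to the nearest obstacle to a vibration amplitude. The exact curve used by the Triggers class is not documented here, so the sketch below assumes a simple linear ramp; `vibrationIntensity` and `warning_distance` are hypothetical names.

```cpp
#include <algorithm>

// Maps the distance to an obstacle to a vibration amplitude in [0, 1].
// At `warning_distance` or farther the result is 0; at zero distance it is 1.
// The linear ramp is an assumption made for illustration.
inline float vibrationIntensity(float distance, float warning_distance)
{
    if (warning_distance <= 0.0f)
        return 0.0f;
    const float t = 1.0f - distance / warning_distance;
    return std::max(0.0f, std::min(1.0f, t));
}
```

For example, with a warning distance of 1 unit, a player 0.5 units from a wall would get half of the maximum vibration amplitude under this assumed mapping.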

Utils

This utility module provides a wide range of helper functions commonly used in VR applications for working with 3D math, object transforms, geometry, visualization, and world management.

Sound Manager Class

The SoundManager class provides centralized management of audio playback in the scene. It supports one-shot and looped 3D sounds, grouped sound variants, volume control, and performance optimization through sound reuse.

Key Features:

| Feature | Description |
|---|---|
| One-shot sounds | Use playSound() to play positional, non-looping sound effects (e.g. impact sounds). |
| Looped sounds | Use playLoopSound() for continuous sounds (e.g. ambient noises). These automatically stop after a specified duration. |
| Sound grouping | Similar sound files can be grouped and randomly selected for variety using addSoundGroup(). |
| Volume control | Global volume can be adjusted with setVolume(). Separate control is available for sound and music channels. |
| Sound warming | Preloads all sound groups into memory using warmAllSounds() to minimize delays during playback. |
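To illustrate the idea behind sound grouping, the sketch below stores several file names under one group name and returns a random one on request. It is a standalone illustration, not the SoundManager API: the `SoundGroups` class, its `addGroup` and `pick` methods, and the file names in the usage note are all hypothetical, and the real signatures of addSoundGroup() and playSound() are not shown on this page.

```cpp
#include <cstddef>
#include <map>
#include <random>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in that demonstrates random selection from a group.
class SoundGroups {
public:
    // Registers (or replaces) a named group of interchangeable sound files.
    void addGroup(const std::string &name, std::vector<std::string> files)
    {
        groups_[name] = std::move(files);
    }

    // Returns a randomly chosen file from the group, or an empty string
    // when the group is unknown or has no files.
    std::string pick(const std::string &name)
    {
        const auto it = groups_.find(name);
        if (it == groups_.end() || it->second.empty())
            return std::string();
        std::uniform_int_distribution<std::size_t> dist(0, it->second.size() - 1);
        return it->second[dist(rng_)];
    }

private:
    std::map<std::string, std::vector<std::string>> groups_;
    std::mt19937 rng_{std::random_device{}()};
};
```

With a group such as "impact" containing "hit_1.wav" and "hit_2.wav", each call to `pick("impact")` returns one of the two names, so repeated impacts do not always sound identical.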

The information on this page is valid for UNIGINE 2.20 SDK.